生成式 AI 的投资回报远超预期?Snowflake 调研全球 1900 位企业与 IT 专业人士后发现平均 ROI 高达 41%!点击下载完整报告

我的灵感来源于 @umeshpatel_us。他投入了大量工作为 Snowflake 账户用量模式创建语义层,这让我不禁思考,Snowflake 何时能直接为我们提供一个包含所有这些内容的语义视图。拥有如此强大的能力和信息,我还能做些什么呢?此外,我的同事 Farris Jaber 撰写了一篇题为《Snowflake 智能是什么》的文章,这进一步促使我从实际应用角度去研究和探索:如何利用这一能力进行对话、诊断、保障安全,并通过 Snowflake 智能控制成本。

根据 Umesh 的文章,其基础在于构建一个语义层映射。我在他的语义层基础上进行了扩展,并创建了另外两个语义层,以更广泛地覆盖 Snowflake 账户用量视图。当然,要实现完全覆盖还需更多工作,但这已能通过一个对话式 AI Agent 为您提供真实信息,从而实现对 Snowflake 模式(包括成本控制、维护与安全)的清晰维护。

请花点时间阅读 Farris Jaber 的文章,它对 Snowflake 智能做了精辟的概述。这项出色的 AI 服务允许用户使用自然语言轻松查询数据,使数据访问更加直观。它利用了由大型语言模型驱动并配备多种工具来访问和解读数据的智能代理。该系统经过精心设计,能够理解您的查询,确定最有效的信息检索方式,执行必要操作,并以清晰、用户友好的方式呈现结果——所有结果都配有图表和富有洞察力的摘要,帮助您轻松理解数据。

最初我决定扩展这项工作,但经过一些尝试后,最终确定创建三个配备语义视图的代理。这些专业化代理旨在让 Snowflake 管理员和平台工程师能够通过对话,直接控制平台最关键的三根支柱:成本、安全和性能。它们开启了快速、有针对性的诊断与洞察,而这在以前只能通过复杂的手动查询来实现。

1.通用型代理(包含以下所有内容):

• 一体化跨领域分析;

• 涵盖 20 个 ACCOUNT_USAGE 表;

• 94 项覆盖所有运营领域的指标;

• 最佳适用场景:整体洞察、跨领域关联分析。

2.成本与性能专家代理:

• 快速聚焦的成本与性能查询;

• 查询执行、积分消耗、资源使用情况;

• 最佳适用场景:快速性能检查、成本分析。

3.安全专家代理

• 专项安全与身份验证监控;

• 登录跟踪、MFA 采用情况、威胁检测;

• 最佳适用场景:安全审计、合规检查。

这种分层方法使 Snowflake 管理员能够快速聚焦于特定领域——无论是紧急的安全审计,还是深入挖掘成本节约机会——使用专为该目的设计的自然语言查询即可实现。

此外,这些语义视图可以直接用于 SQL 查询,这项功能目前正在开发中,旨在实现更广泛的商业智能工具集成。截至目前,只有 Sigma Computing 和 Hex 提供与这些语义视图的原生集成,而对 PowerBI 和 Tableau 等其他主要工具的支持也在积极开发中。您可以查看 @uniquejtx_3744 撰写的这篇关于使用 Cursor 构建语义视图的文章。

供您参考,目前我正在从所有这些视图中提取信息。

我所使用的账户用量视图:

查询与性能(共 2 个视图)

· QUERY_HISTORY (别名:qh)

· QUERY_ATTRIBUTION_HISTORY (别名:qa)

安全与身份验证(共 1 个视图)

· LOGIN_HISTORY (别名:login)

成本与资源使用情况(共 4 个视图)

· WAREHOUSE_METERING_HISTORY (别名:wh)

· STORAGE_USAGE (别名:storage)

· DATABASE_STORAGE_USAGE_HISTORY (别名:db_storage)

· STAGE_STORAGE_USAGE_HISTORY (别名:stage_storage)

数据治理(共 4 个视图)

· USERS (别名:users)

· ROLES (别名:roles)

· GRANTS_TO_USERS (别名:grants_users)

· GRANTS_TO_ROLES (别名:grants_roles)

运维与监控(共 2 个视图)

· TASK_HISTORY (别名:task_hist)

· SERVERLESS_TASK_HISTORY (别名:serverless_task)

高级运维(共 7 个视图)

· PIPE_USAGE_HISTORY (别名:pipe_usage)

· AUTOMATIC_CLUSTERING_HISTORY (别名:clustering)

· MATERIALIZED_VIEW_REFRESH_HISTORY (别名:mv_refresh)

· REPLICATION_USAGE_HISTORY (别名:replication)

· DATA_TRANSFER_HISTORY (别名:data_transfer)

· WAREHOUSE_LOAD_HISTORY (别名:wh_load)

· METERING_DAILY_HISTORY (别名:metering_daily)

开始配置

我复制了 Umesh 的脚本,建立了一个新的 GitHub 仓库并在此基础上进行了扩展。我添加了更多 Snowflake 账户用量视图,并创建了三个新的 Agent。

前提条件:

· 拥有具备 ACCOUNTADMIN 权限的 Snowflake 账户;

· 您所在区域已启用 Cortex 功能。

更重要的是,创建此实验环境需要以下五个步骤。

USE ROLE ACCOUNTADMIN;-- to get access to all the models to useALTER ACCOUNT SET CORTEX_ENABLED_CROSS_REGION = 'ANY_REGION' ;-- enable cortex analystALTER ACCOUNT SET ENABLE_CORTEX_ANALYST = TRUE;-- CREATE SAMPLE DATABASE is not needed but needed if you want to do extra testingCREATE DATABASE IF NOT EXISTS SNOWFLAKE_SAMPLE_DATA FROM SHARE SFC_SAMPLES.SAMPLE_DATA;GRANT IMPORTED PRIVILEGES ON DATABASE SNOWFLAKE_SAMPLE_DATA TO ROLE PUBLIC;-- YOUR CUSTOM ROLE FOR CORTEX ADMINSET role_name='cortex_role';SET warehouse_name = 'cortex_wh';SET current_user=CURRENT_USER();CREATE ROLE IF NOT EXISTS IDENTIFIER($role_name);GRANT ROLE IDENTIFIER($role_name) TO ROLE ACCOUNTADMIN;GRANT ROLE IDENTIFIER($role_name) TO USER IDENTIFIER($current_user);GRANT CREATE DATABASE ON ACCOUNT TO ROLE IDENTIFIER($role_name);GRANT CREATE WAREHOUSE ON ACCOUNT TO ROLE IDENTIFIER($role_name);GRANT CREATE ROLE ON ACCOUNT TO ROLE IDENTIFIER($role_name);GRANT MANAGE GRANTS ON ACCOUNT TO ROLE IDENTIFIER($role_name);GRANT CREATE INTEGRATION ON ACCOUNT TO ROLE IDENTIFIER($role_name);GRANT CREATE APPLICATION PACKAGE ON ACCOUNT TO ROLE IDENTIFIER($role_name);GRANT CREATE APPLICATION ON ACCOUNT TO ROLE IDENTIFIER($role_name);GRANT IMPORT SHARE ON ACCOUNT TO ROLE IDENTIFIER($role_name);-- control who you want to use cortexGRANT DATABASE ROLE SNOWFLAKE.CORTEX_USER TO ROLE PUBLIC;-- show modelsSHOW MODELS IN SCHEMA SNOWFLAKE.MODELS;-- to refresh all modesl use it , it will take few minutes to refreshCALL SNOWFLAKE.MODELS.CORTEX_BASE_MODELS_REFRESH();-- if you do not see all the model run above command to do modelrefreshSHOW APPLICATION ROLES LIKE '%model%' IN APPLICATION SNOWFLAKE;-- control which model to use by whom, using following as exampleGRANT APPLICATION ROLE SNOWFLAKE."CORTEX-MODEL-ROLE-ALL" TO ROLE IDENTIFIER($role_name);USE ROLE IDENTIFIER($role_name);-- main cortex database, DO NOT CHANGE NAMECREATE DATABASE IF NOT EXISTS SNOWFLAKE_INTELLIGENCE;--for agents used by Snowflake IntelligenceCREATE SCHEMA IF NOT EXISTS SNOWFLAKE_INTELLIGENCE.AGENTS;-- for tools used by agentsCREATE SCHEMA IF NOT EXISTS SNOWFLAKE_INTELLIGENCE.TOOLS;-- Allow anyone to see and use the agents and semantic views-- Please note that we are granting access to the public role, so all users can accessGRANT USAGE ON DATABASE SNOWFLAKE_INTELLIGENCE TO ROLE PUBLIC;GRANT USAGE ON SCHEMA SNOWFLAKE_INTELLIGENCE.AGENTS TO ROLE PUBLIC;GRANT USAGE ON SCHEMA SNOWFLAKE_INTELLIGENCE.TOOLS TO ROLE PUBLIC;-- Grant SELECT on future semantic views in TOOLS schema (so PUBLIC can query them)GRANT SELECT ON ALL SEMANTIC VIEWS IN SCHEMA SNOWFLAKE_INTELLIGENCE.TOOLS TO ROLE PUBLIC;GRANT SELECT ON FUTURE SEMANTIC VIEWS IN SCHEMA SNOWFLAKE_INTELLIGENCE.TOOLS TO ROLE PUBLIC;-- Grant EXECUTE on future agents in AGENTS schema (so PUBLIC can use them)GRANT EXECUTE ON ALL AGENTS IN SCHEMA SNOWFLAKE_INTELLIGENCE.AGENTS TO ROLE PUBLIC;GRANT EXECUTE ON FUTURE AGENTS IN SCHEMA SNOWFLAKE_INTELLIGENCE.AGENTS TO ROLE PUBLIC;CREATE OR REPLACE WAREHOUSE IDENTIFIER($warehouse_name) AUTO_SUSPEND = 60;-- Grant privileges to cortex_role to create objectsGRANT CREATE AGENT ON SCHEMA SNOWFLAKE_INTELLIGENCE.AGENTS TO ROLE IDENTIFIER($role_name);GRANT ALL PRIVILEGES ON SCHEMA SNOWFLAKE_INTELLIGENCE.TOOLS TO ROLE IDENTIFIER($role_name);-- Grant access to ACCOUNT_USAGE for semantic viewsGRANT IMPORTED PRIVILEGES ON DATABASE SNOWFLAKE TO ROLE IDENTIFIER($role_name);ALTER USER IDENTIFIER($CURRENT_USER) SET DEFAULT_ROLE = cortex_role, DEFAULT_WAREHOUSE = cortex_wh;# 1. Clone repositorygit clone https://github.com/augustorosa/cortex-snowflake-account-security-agent.gitcd cortex-snowflake-account-security-agent# 2. Deploy in 3 simple steps (5-7 minutes total)snowsql -f "scripts/1. lab foundations.sql" # Foundationsnowsql -f "scripts/2. SNOWFLAKE_MAINTENANCE_SVW_GENERALIST.sql" # Semantic Viewsnowsql -f "scripts/3. SNOWFLAKE_MAINTENANCE_AGENT_GENERALIST.sql" # AI Agent# 3. Test itSELECT SNOWFLAKE_INTELLIGENCE.AGENTS.SNOWFLAKE_MAINTENANCE_AGENT( 'What is my overall account health?');### 🔧 Optional: Deploy Specialist AgentsIf you want focused single-domain agents for specific teams:# Cost/Performance Specialist (optional)snowsql -f "scripts/2.2 COST_PERFORMANCE_SVW_SPECIALIST.sql"snowsql -f "scripts/5.2 COST_PERFORMANCE_AGENT_SPECIALIST.sql"# Security Specialist (optional)snowsql -f "scripts/2.3 SECURITY_MONITORING_SVW_SPECIALIST.sql"snowsql -f "scripts/5.3 SECURITY_MONITORING_AGENT_SPECIALIST.sql"# Email alerts (optional)snowsql -f "scripts/4. email integration.sql"# Marketplace acceptance (optional - for specific use cases)snowsql -f "scripts/5. accept marketplace terms.sql"-- ============================================================================-- SNOWFLAKE MAINTENANCE SEMANTIC VIEW (GENERALIST)-- ============================================================================-- Comprehensive unified semantic view combining cost, performance, security,-- governance, and operations monitoring across all Snowflake ACCOUNT_USAGE views---- ARCHITECTURE:-- - This is the GENERALIST semantic view for comprehensive cross-domain analysis-- - Complements specialized views: 2.2 (Cost/Performance), 2.3 (Security)---- DATA COVERAGE:-- • Query & Performance-- • Security & Authentication -- • Cost & Resource Usage-- • Data Governance-- • Operations & Monitoring-- • Advanced Operations-- ============================================================================USE ROLE cortex_role;USE SNOWFLAKE_INTELLIGENCE.TOOLS;-- ============================================================================-- COMPREHENSIVE SNOWFLAKE OPERATIONS SEMANTIC VIEW-- ============================================================================-- Includes: 20 ACCOUNT_USAGE tables, 35 dimensions, 94 metrics-- -- Query & Performance: QUERY_HISTORY, QUERY_ATTRIBUTION_HISTORY-- Security: LOGIN_HISTORY-- Cost & Storage: WAREHOUSE_METERING, STORAGE_USAGE, DB/STAGE_STORAGE-- Governance: USERS, ROLES, GRANTS-- Operations: TASK_HISTORY, SERVERLESS_TASK_HISTORY-- Advanced: PIPE, CLUSTERING, MV, REPLICATION, TRANSFER, LOAD, METERING-- ============================================================================CREATE OR REPLACE SEMANTIC VIEW SNOWFLAKE_INTELLIGENCE.TOOLS.SNOWFLAKE_MAINTENANCE_SVWTABLES ( qh AS SNOWFLAKE.ACCOUNT_USAGE.QUERY_HISTORY, qa AS SNOWFLAKE.ACCOUNT_USAGE.QUERY_ATTRIBUTION_HISTORY, login AS SNOWFLAKE.ACCOUNT_USAGE.LOGIN_HISTORY, wh AS SNOWFLAKE.ACCOUNT_USAGE.WAREHOUSE_METERING_HISTORY, storage AS SNOWFLAKE.ACCOUNT_USAGE.STORAGE_USAGE, db_storage AS SNOWFLAKE.ACCOUNT_USAGE.DATABASE_STORAGE_USAGE_HISTORY, stage_storage AS SNOWFLAKE.ACCOUNT_USAGE.STAGE_STORAGE_USAGE_HISTORY, users AS SNOWFLAKE.ACCOUNT_USAGE.USERS, roles AS SNOWFLAKE.ACCOUNT_USAGE.ROLES, grants_users AS SNOWFLAKE.ACCOUNT_USAGE.GRANTS_TO_USERS, grants_roles AS SNOWFLAKE.ACCOUNT_USAGE.GRANTS_TO_ROLES, task_hist AS SNOWFLAKE.ACCOUNT_USAGE.TASK_HISTORY, serverless_task AS SNOWFLAKE.ACCOUNT_USAGE.SERVERLESS_TASK_HISTORY, pipe_usage AS SNOWFLAKE.ACCOUNT_USAGE.PIPE_USAGE_HISTORY, clustering AS SNOWFLAKE.ACCOUNT_USAGE.AUTOMATIC_CLUSTERING_HISTORY, mv_refresh AS SNOWFLAKE.ACCOUNT_USAGE.MATERIALIZED_VIEW_REFRESH_HISTORY, replication AS SNOWFLAKE.ACCOUNT_USAGE.REPLICATION_USAGE_HISTORY, data_transfer AS SNOWFLAKE.ACCOUNT_USAGE.DATA_TRANSFER_HISTORY, wh_load AS SNOWFLAKE.ACCOUNT_USAGE.WAREHOUSE_LOAD_HISTORY, metering_daily AS SNOWFLAKE.ACCOUNT_USAGE.METERING_DAILY_HISTORY)-- ============================================================================-- DIMENSIONS: Categorical attributes for filtering and grouping-- ============================================================================DIMENSIONS ( -- === QUERY HISTORY DIMENSIONS === qh.QUERY_ID AS query_id COMMENT='Unique identifier for each query', qh.QUERY_TEXT AS query_text COMMENT='Full SQL text of the query', qh.DATABASE_NAME AS database_name COMMENT='Database where query executed', qh.SCHEMA_NAME AS schema_name COMMENT='Schema where query executed', qh.QUERY_TYPE AS query_type COMMENT='Type of query (SELECT, INSERT, etc)', qh.SESSION_ID AS session_id COMMENT='Session identifier', qh.USER_NAME AS user_name COMMENT='User who executed the query', qh.ROLE_NAME AS role_name COMMENT='Role used for query execution', qh.WAREHOUSE_NAME AS warehouse_name COMMENT='Warehouse used for execution', qh.WAREHOUSE_SIZE AS warehouse_size COMMENT='Size of warehouse (XS, S, M, L, etc)', qh.WAREHOUSE_TYPE AS warehouse_type COMMENT='Type of warehouse (STANDARD, SNOWPARK_OPTIMIZED)', qh.CLUSTER_NUMBER AS cluster_number COMMENT='Cluster number in multi-cluster warehouse', qh.QUERY_TAG AS query_tag COMMENT='User-defined query tag', qh.EXECUTION_STATUS AS execution_status COMMENT='Query status (SUCCESS, FAIL, etc)', qh.ERROR_CODE AS error_code COMMENT='Error code if query failed', qh.ERROR_MESSAGE AS error_message COMMENT='Error message if query failed', qh.START_TIME AS start_time COMMENT='Query start timestamp', qh.END_TIME AS end_time COMMENT='Query end timestamp', qh.QUERY_HASH AS query_hash COMMENT='Hash of query structure', qh.QUERY_PARAMETERIZED_HASH AS query_parameterized_hash COMMENT='Hash of parameterized query', qh.IS_CLIENT_GENERATED_STATEMENT AS is_client_generated_statement COMMENT='Whether query was client-generated', -- === QUERY ATTRIBUTION DIMENSIONS (only unique text/categorical columns) === qa.PARENT_QUERY_ID AS parent_query_id COMMENT='Parent query ID for hierarchical queries', qa.ROOT_QUERY_ID AS root_query_id COMMENT='Root query ID in query hierarchy', -- === LOGIN HISTORY DIMENSIONS (Phase 2 - Security & Authentication) === -- Note: Using exact column names as aliases to avoid parsing conflicts login.EVENT_TIMESTAMP AS event_timestamp COMMENT='When the login attempt occurred', login.EVENT_TYPE AS event_type COMMENT='Event type (LOGIN)', login.CLIENT_IP AS client_ip COMMENT='IP address of login attempt', login.REPORTED_CLIENT_TYPE AS reported_client_type COMMENT='Client software type', login.REPORTED_CLIENT_VERSION AS reported_client_version COMMENT='Client software version', login.FIRST_AUTHENTICATION_FACTOR AS first_authentication_factor COMMENT='First authentication method', login.SECOND_AUTHENTICATION_FACTOR AS second_authentication_factor COMMENT='Second authentication factor (MFA)', login.IS_SUCCESS AS is_success COMMENT='YES if successful, NO if failed', login.ERROR_CODE AS error_code COMMENT='Error code if login failed', login.ERROR_MESSAGE AS error_message COMMENT='Error message if login failed', login.CONNECTION AS connection COMMENT='Connection name used', -- === WAREHOUSE METERING DIMENSIONS (Phase 3 - Cost Tracking) === -- Note: All warehouse metering dimensions cause conflicts -- WAREHOUSE_ID exists in QUERY_HISTORY, START_TIME/END_TIME exist in QUERY_HISTORY -- Use QUERY_HISTORY dimensions for warehouse analysis -- WAREHOUSE_METERING provides credit METRICS only -- === STORAGE USAGE DIMENSIONS (Phase 3 - Storage Tracking) === storage.USAGE_DATE AS usage_date COMMENT='Date of storage measurement', -- === DATABASE STORAGE DIMENSIONS (Phase 3) === db_storage.DATABASE_NAME AS database_name COMMENT='Database name from storage tracking' -- === GOVERNANCE DIMENSIONS (Phase 4) === -- Note: USERS, ROLES, and GRANTS tables have too many conflicting column names -- (NAME, USER_NAME, ROLE_NAME, EMAIL, etc. all cause parsing conflicts) -- These tables provide METRICS only for governance analytics: -- - Total users, MFA adoption rate -- - Total roles -- - Grant counts -- Use QUERY_HISTORY dimensions (user_name, role_name) for user/role analysis -- === TASK OPERATIONS (Phase 5) === -- Note: TASK_HISTORY and SERVERLESS_TASK_HISTORY have too many conflicts -- (NAME, TASK_NAME, STATE, START_TIME, END_TIME, SCHEDULED_TIME, QUERY_ID, etc.) -- These tables provide METRICS only for task monitoring: -- - Total task runs, success/failure rates -- - Serverless task credits -- Use QUERY_HISTORY for query-level task analysis (via task_hist.QUERY_ID join) -- === ADVANCED OPERATIONS (Phase 6) === -- Note: Phase 6 tables (PIPE_USAGE, CLUSTERING, MV_REFRESH, REPLICATION, etc.) -- Expected to have similar conflicts (NAME, START_TIME, END_TIME, etc.) -- Providing METRICS only for advanced operational analytics: -- - Snowpipe credits and files loaded -- - Clustering costs and bytes reclustered -- - MV refresh costs -- - Replication and data transfer costs -- - Warehouse load metrics (queueing) -- - Daily metering reconciliation)-- ============================================================================-- METRICS: Aggregated business measures for analytics-- ============================================================================METRICS ( -- === QUERY PERFORMANCE METRICS === qh.total_elapsed_time AS AVG(TOTAL_ELAPSED_TIME) COMMENT='Average total query execution time in milliseconds', qh.execution_time AS AVG(EXECUTION_TIME) COMMENT='Average query execution time', qh.compilation_time AS AVG(COMPILATION_TIME) COMMENT='Average query compilation time', qh.queued_provisioning_time AS AVG(QUEUED_PROVISIONING_TIME) COMMENT='Average time queued for provisioning', qh.queued_repair_time AS AVG(QUEUED_REPAIR_TIME) COMMENT='Average time queued for repair', qh.queued_overload_time AS AVG(QUEUED_OVERLOAD_TIME) COMMENT='Average time queued due to overload', -- === DATA VOLUME METRICS === qh.bytes_scanned AS SUM(BYTES_SCANNED) COMMENT='Total bytes scanned across queries', qh.bytes_written AS SUM(BYTES_WRITTEN) COMMENT='Total bytes written', qh.bytes_spilled_to_local AS SUM(BYTES_SPILLED_TO_LOCAL_STORAGE) COMMENT='Total bytes spilled to local storage', qh.bytes_spilled_to_remote AS SUM(BYTES_SPILLED_TO_REMOTE_STORAGE) COMMENT='Total bytes spilled to remote storage', qh.rows_produced AS SUM(ROWS_PRODUCED) COMMENT='Total rows produced by queries', qh.rows_inserted AS SUM(ROWS_INSERTED) COMMENT='Total rows inserted', qh.rows_updated AS SUM(ROWS_UPDATED) COMMENT='Total rows updated', qh.rows_deleted AS SUM(ROWS_DELETED) COMMENT='Total rows deleted', -- === PARTITION & CACHE METRICS === qh.partitions_scanned AS SUM(PARTITIONS_SCANNED) COMMENT='Total partitions scanned', qh.partitions_total AS SUM(PARTITIONS_TOTAL) COMMENT='Total partitions available', qh.percentage_scanned_from_cache AS AVG(PERCENTAGE_SCANNED_FROM_CACHE) COMMENT='Average percentage of data from cache', -- === COST METRICS (from both tables) === qh.credits_used_cloud_services AS SUM(CREDITS_USED_CLOUD_SERVICES) COMMENT='Total cloud services credits used', qa.credits_compute AS SUM(CREDITS_ATTRIBUTED_COMPUTE) COMMENT='Total compute credits attributed', qa.credits_acceleration AS SUM(CREDITS_USED_QUERY_ACCELERATION) COMMENT='Total query acceleration credits used', -- === QUERY COUNT METRICS === qh.total_queries AS COUNT(*) COMMENT='Total number of queries', qh.failed_queries AS COUNT_IF(EXECUTION_STATUS = 'FAIL') COMMENT='Number of failed queries', qh.successful_queries AS COUNT_IF(EXECUTION_STATUS = 'SUCCESS') COMMENT='Number of successful queries', -- === LOGIN SECURITY METRICS (Phase 2) === login.total_login_attempts AS COUNT(*) COMMENT='Total login attempts', login.failed_login_attempts AS COUNT(CASE WHEN login.IS_SUCCESS = 'NO' THEN 1 END) COMMENT='Failed login count', login.successful_login_attempts AS COUNT(CASE WHEN login.IS_SUCCESS = 'YES' THEN 1 END) COMMENT='Successful login count', login.unique_login_users AS COUNT(DISTINCT login.USER_NAME) COMMENT='Distinct users attempting login', login.unique_login_ips AS COUNT(DISTINCT login.CLIENT_IP) COMMENT='Distinct IP addresses', login.mfa_login_usage AS COUNT(CASE WHEN login.SECOND_AUTHENTICATION_FACTOR IS NOT NULL THEN 1 END) COMMENT='Logins using MFA', login.users_with_login_failures AS COUNT(DISTINCT CASE WHEN login.IS_SUCCESS = 'NO' THEN login.USER_NAME END) COMMENT='Users with failed login attempts', login.ips_with_login_failures AS COUNT(DISTINCT CASE WHEN login.IS_SUCCESS = 'NO' THEN login.CLIENT_IP END) COMMENT='IPs with failed login attempts', login.login_success_rate_pct AS ( CAST(COUNT(CASE WHEN login.IS_SUCCESS = 'YES' THEN 1 END) AS FLOAT) * 100.0 / NULLIF(COUNT(*), 0) ) COMMENT='Login success rate percentage', login.mfa_adoption_pct AS ( CAST(COUNT(CASE WHEN login.SECOND_AUTHENTICATION_FACTOR IS NOT NULL THEN 1 END) AS FLOAT) * 100.0 / NULLIF(COUNT(CASE WHEN login.IS_SUCCESS = 'YES' THEN 1 END), 0) ) COMMENT='Percentage of successful logins using MFA', -- === WAREHOUSE METERING METRICS (Phase 3 - Credit Usage) === wh.total_credits_used AS SUM(wh.CREDITS_USED) COMMENT='Total credits used by warehouses', wh.total_credits_compute AS SUM(wh.CREDITS_USED_COMPUTE) COMMENT='Total compute credits used', wh.total_credits_cloud_services AS SUM(wh.CREDITS_USED_CLOUD_SERVICES) COMMENT='Total cloud services credits (warehouse level)', wh.avg_credits_per_hour AS AVG(wh.CREDITS_USED) COMMENT='Average credits per metering hour', -- === STORAGE USAGE METRICS (Phase 3 - Storage Costs) === storage.total_storage_bytes AS SUM(storage.STORAGE_BYTES) COMMENT='Total table storage in bytes', storage.total_stage_bytes AS SUM(storage.STAGE_BYTES) COMMENT='Total stage storage in bytes', storage.total_failsafe_bytes AS SUM(storage.FAILSAFE_BYTES) COMMENT='Total failsafe storage in bytes', storage.total_hybrid_table_bytes AS SUM(storage.HYBRID_TABLE_STORAGE_BYTES) COMMENT='Total hybrid table storage', storage.avg_storage_bytes AS AVG(storage.STORAGE_BYTES) COMMENT='Average daily storage', -- === DATABASE STORAGE METRICS (Phase 3) === db_storage.avg_database_bytes AS AVG(db_storage.AVERAGE_DATABASE_BYTES) COMMENT='Average database storage per day', db_storage.avg_failsafe_bytes AS AVG(db_storage.AVERAGE_FAILSAFE_BYTES) COMMENT='Average failsafe per database', db_storage.total_database_storage AS SUM(db_storage.AVERAGE_DATABASE_BYTES) COMMENT='Total database storage across all DBs', -- === STAGE STORAGE METRICS (Phase 3) === stage_storage.avg_stage_bytes AS AVG(stage_storage.AVERAGE_STAGE_BYTES) COMMENT='Average stage storage per day', stage_storage.total_stage_storage AS SUM(stage_storage.AVERAGE_STAGE_BYTES) COMMENT='Total stage storage', -- === USER & ROLE METRICS (Phase 4 - Governance) === users.total_users AS COUNT(*) COMMENT='Total number of users', users.active_users AS COUNT_IF(users.DISABLED IS NULL OR users.DISABLED = FALSE) COMMENT='Count of active users', users.mfa_enabled_users AS COUNT_IF(users.HAS_MFA = TRUE) COMMENT='Users with MFA enabled', users.mfa_adoption_rate AS ( CAST(COUNT_IF(users.HAS_MFA = TRUE) AS FLOAT) * 100.0 / NULLIF(COUNT(*), 0) ) COMMENT='Percentage of users with MFA', roles.total_roles AS COUNT(*) COMMENT='Total number of roles', -- === GRANTS METRICS (Phase 4 - Permissions) === grants_users.total_role_grants_to_users AS COUNT(*) COMMENT='Total role grants to users', grants_users.unique_users_with_roles AS COUNT(DISTINCT grants_users.GRANTEE_NAME) COMMENT='Users with role grants', grants_roles.total_privilege_grants AS COUNT(*) COMMENT='Total privilege grants to roles', grants_roles.unique_roles_with_grants AS COUNT(DISTINCT grants_roles.GRANTEE_NAME) COMMENT='Roles with privilege grants', -- === TASK EXECUTION METRICS (Phase 5 - Operations) === task_hist.total_task_runs AS COUNT(*) COMMENT='Total task executions', task_hist.successful_tasks AS COUNT_IF(task_hist.STATE = 'SUCCEEDED') COMMENT='Successful task runs', task_hist.failed_tasks AS COUNT_IF(task_hist.STATE = 'FAILED') COMMENT='Failed task runs', task_hist.unique_tasks AS COUNT(DISTINCT task_hist.NAME) COMMENT='Distinct tasks executed', task_hist.task_success_rate AS ( CAST(COUNT_IF(task_hist.STATE = 'SUCCEEDED') AS FLOAT) * 100.0 / NULLIF(COUNT(*), 0) ) COMMENT='Task success rate percentage', -- === SERVERLESS TASK METRICS (Phase 5 - Serverless Costs) === serverless_task.total_serverless_credits AS SUM(serverless_task.CREDITS_USED) COMMENT='Total serverless task credits', serverless_task.avg_serverless_credits AS AVG(serverless_task.CREDITS_USED) COMMENT='Average credits per serverless task', serverless_task.serverless_task_count AS COUNT(*) COMMENT='Count of serverless task executions', serverless_task.unique_serverless_tasks AS COUNT(DISTINCT serverless_task.TASK_NAME) COMMENT='Distinct serverless tasks', -- === SNOWPIPE METRICS (Phase 6 - Data Loading) === pipe_usage.total_pipe_credits AS SUM(pipe_usage.CREDITS_USED) COMMENT='Total Snowpipe credits consumed', pipe_usage.total_files_inserted AS SUM(pipe_usage.FILES_INSERTED) COMMENT='Total files loaded via Snowpipe', pipe_usage.total_bytes_inserted AS SUM(pipe_usage.BYTES_INSERTED) COMMENT='Total bytes loaded via Snowpipe', pipe_usage.avg_pipe_credits AS AVG(pipe_usage.CREDITS_USED) COMMENT='Average Snowpipe credits per execution', -- === CLUSTERING METRICS (Phase 6 - Maintenance Costs) === clustering.total_clustering_credits AS SUM(clustering.CREDITS_USED) COMMENT='Total automatic clustering credits', clustering.total_bytes_reclustered AS SUM(clustering.NUM_BYTES_RECLUSTERED) COMMENT='Total bytes reclustered', clustering.total_rows_reclustered AS SUM(clustering.NUM_ROWS_RECLUSTERED) COMMENT='Total rows reclustered', clustering.avg_clustering_credits AS AVG(clustering.CREDITS_USED) COMMENT='Average clustering credits per operation', -- === MATERIALIZED VIEW METRICS (Phase 6 - MV Costs) === mv_refresh.total_mv_credits AS SUM(mv_refresh.CREDITS_USED) COMMENT='Total MV refresh credits', mv_refresh.total_mv_refreshes AS COUNT(*) COMMENT='Total MV refresh operations', mv_refresh.avg_mv_credits AS AVG(mv_refresh.CREDITS_USED) COMMENT='Average credits per MV refresh', -- === REPLICATION METRICS (Phase 6 - Replication Costs) === replication.total_replication_credits AS SUM(replication.CREDITS_USED) COMMENT='Total replication credits', replication.total_bytes_replicated AS SUM(replication.BYTES_TRANSFERRED) COMMENT='Total bytes replicated', replication.avg_replication_credits AS AVG(replication.CREDITS_USED) COMMENT='Average replication credits', -- === DATA TRANSFER METRICS (Phase 6 - Transfer Costs) === data_transfer.total_transfer_bytes AS SUM(data_transfer.BYTES_TRANSFERRED) COMMENT='Total bytes transferred cross-region/cloud', data_transfer.avg_transfer_bytes AS AVG(data_transfer.BYTES_TRANSFERRED) COMMENT='Average bytes per transfer', data_transfer.total_transfer_operations AS COUNT(*) COMMENT='Total data transfer operations', -- === WAREHOUSE LOAD METRICS (Phase 6 - Performance) === wh_load.avg_running_queries AS AVG(wh_load.AVG_RUNNING) COMMENT='Average running queries', wh_load.avg_queued_load AS AVG(wh_load.AVG_QUEUED_LOAD) COMMENT='Average queued query load', wh_load.avg_queued_provisioning AS AVG(wh_load.AVG_QUEUED_PROVISIONING) COMMENT='Average provisioning queue', wh_load.avg_blocked_queries AS AVG(wh_load.AVG_BLOCKED) COMMENT='Average blocked queries', -- === DAILY METERING METRICS (Phase 6 - Reconciliation) === metering_daily.total_daily_credits AS SUM(metering_daily.CREDITS_USED) COMMENT='Total billable credits (daily)', metering_daily.total_compute_credits_daily AS SUM(metering_daily.CREDITS_USED_COMPUTE) COMMENT='Total compute credits (daily)', metering_daily.total_cloud_services_daily AS SUM(metering_daily.CREDITS_USED_CLOUD_SERVICES) COMMENT='Total cloud services credits (daily)', metering_daily.avg_daily_credits AS AVG(metering_daily.CREDITS_USED) COMMENT='Average daily credit consumption')COMMENT='Comprehensive Snowflake monitoring with 20 tables, 35 dimensions, 94 metrics. Covers queries, security, storage, governance, tasks, Snowpipe, clustering, MVs, replication, data transfer, and warehouse load.'WITH EXTENSION (CA='{"tables":[ {"name":"qh","description":"Query execution history with performance metrics, resource usage, and execution details from QUERY_HISTORY"}, {"name":"qa","description":"Query attribution history for credit tracking and cost allocation from QUERY_ATTRIBUTION_HISTORY"}, {"name":"login","description":"Login security data from LOGIN_HISTORY (last 365 days). Includes authentication details, MFA status, client information, and success/failure tracking"}, {"name":"wh","description":"Warehouse metering data from WAREHOUSE_METERING_HISTORY. Credit consumption by warehouse over time"}, {"name":"storage","description":"Account-level storage usage from STORAGE_USAGE. Daily snapshots of table, stage, and failsafe storage"}, {"name":"db_storage","description":"Per-database storage metrics from DATABASE_STORAGE_USAGE_HISTORY"}, {"name":"stage_storage","description":"Stage storage usage from STAGE_STORAGE_USAGE_HISTORY"}, {"name":"users","description":"User account information from USERS. Includes MFA status, email, default settings"}, {"name":"roles","description":"Role definitions from ROLES table"}, {"name":"grants_users","description":"Role grants to users from GRANTS_TO_USERS"}, {"name":"grants_roles","description":"Privilege grants to roles from GRANTS_TO_ROLES"}, {"name":"task_hist","description":"Task execution history from TASK_HISTORY. Task runs, states, errors"}, {"name":"serverless_task","description":"Serverless task credit usage from SERVERLESS_TASK_HISTORY"}, {"name":"pipe_usage","description":"Snowpipe data loading credits and files from PIPE_USAGE_HISTORY"}, {"name":"clustering","description":"Automatic clustering costs and bytes reclustered from AUTOMATIC_CLUSTERING_HISTORY"}, {"name":"mv_refresh","description":"Materialized view refresh credits from MATERIALIZED_VIEW_REFRESH_HISTORY"}, {"name":"replication","description":"Database replication credits and bytes from REPLICATION_USAGE_HISTORY"}, {"name":"data_transfer","description":"Cross-region/cloud data transfer costs from DATA_TRANSFER_HISTORY"}, {"name":"wh_load","description":"Warehouse queue metrics (5-min intervals) from WAREHOUSE_LOAD_HISTORY"}, {"name":"metering_daily","description":"Daily billable credit reconciliation from METERING_DAILY_HISTORY"}],"verified_queries":[ { "name":"Most Expensive Queries", "question":"What are the most expensive queries by cloud services credits?", "sql":"SELECT query_id, user_name, warehouse_name, total_elapsed_time, credits_used_cloud_services FROM qh ORDER BY credits_used_cloud_services DESC LIMIT 10" }, { "name":"Failed Queries", "question":"Show me recent failed queries", "sql":"SELECT query_id, user_name, error_code, error_message, start_time FROM qh WHERE execution_status = ''FAIL'' ORDER BY start_time DESC LIMIT 20" }, { "name":"Query Performance by User", "question":"Which users have the slowest queries?", "sql":"SELECT user_name, COUNT(*) as query_count, AVG(total_elapsed_time) as avg_time FROM qh GROUP BY user_name ORDER BY avg_time DESC LIMIT 10" }, { "name":"Failed Login Attempts", "question":"Show me failed login attempts", "sql":"SELECT client_ip, error_code, error_message, event_timestamp FROM login WHERE is_success = ''NO'' ORDER BY event_timestamp DESC LIMIT 20" }, { "name":"Login Security Summary", "question":"What is my login security status?", "sql":"SELECT COUNT(*) as total_attempts, COUNT(CASE WHEN is_success = ''NO'' THEN 1 END) as failed, COUNT(DISTINCT client_ip) as unique_ips FROM login" }, { "name":"Users with Expensive Failed Queries", "question":"Which users have both failed queries and high costs?", "sql":"SELECT qh.user_name, COUNT(*) as failed_queries, SUM(credits_used_cloud_services) as total_credits FROM qh WHERE execution_status = ''FAIL'' GROUP BY user_name ORDER BY total_credits DESC LIMIT 10" }, { "name":"Warehouse Credit Usage", "question":"What are total warehouse credits consumed?", "sql":"SELECT SUM(wh.CREDITS_USED) as total_credits, AVG(wh.CREDITS_USED) as avg_credits_per_hour, SUM(wh.CREDITS_USED_COMPUTE) as compute_credits, SUM(wh.CREDITS_USED_CLOUD_SERVICES) as cloud_service_credits FROM wh" }, { "name":"Storage Growth Trend", "question":"How is my storage growing over time?", "sql":"SELECT usage_date, SUM(storage.STORAGE_BYTES) / 1099511627776.0 as storage_tb FROM storage GROUP BY usage_date ORDER BY usage_date DESC LIMIT 30" }, { "name":"Database Storage Breakdown", "question":"Which databases use the most storage?", "sql":"SELECT database_name, AVG(db_storage.AVERAGE_DATABASE_BYTES) / 1099511627776.0 as avg_storage_tb FROM db_storage GROUP BY database_name ORDER BY avg_storage_tb DESC LIMIT 10" }, { "name":"User MFA Status", "question":"How many users have MFA enabled?", "sql":"SELECT COUNT(*) as total_users, COUNT_IF(users.HAS_MFA = TRUE) as mfa_enabled, CAST(COUNT_IF(users.HAS_MFA = TRUE) AS FLOAT) * 100.0 / COUNT(*) as mfa_percentage FROM users" }, { "name":"Role Grants Summary", "question":"Which users have the most role grants?", "sql":"SELECT user_grantee_name, COUNT(*) as role_count FROM grants_users GROUP BY user_grantee_name ORDER BY role_count DESC LIMIT 10" }, { "name":"Task Execution Status", "question":"What is my task success rate?", "sql":"SELECT COUNT(*) as total_tasks, COUNT_IF(task_hist.STATE = ''SUCCEEDED'') as successful, COUNT_IF(task_hist.STATE = ''FAILED'') as failed, CAST(COUNT_IF(task_hist.STATE = ''SUCCEEDED'') AS FLOAT) * 100 / COUNT(*) as success_rate_pct FROM task_hist" }, { "name":"Serverless Task Costs", "question":"How much are serverless tasks costing me?", "sql":"SELECT SUM(serverless_task.CREDITS_USED) as total_credits, COUNT(*) as total_runs, AVG(serverless_task.CREDITS_USED) as avg_credits_per_run FROM serverless_task" }, { "name":"Snowpipe Usage Summary", "question":"How much data has Snowpipe loaded?", "sql":"SELECT SUM(pipe_usage.CREDITS_USED) as total_credits, SUM(pipe_usage.FILES_INSERTED) as total_files, SUM(pipe_usage.BYTES_INSERTED) / 1099511627776.0 as total_tb_loaded FROM pipe_usage" }, { "name":"Clustering Costs", "question":"What are my automatic clustering costs?", "sql":"SELECT SUM(clustering.CREDITS_USED) as total_credits, SUM(clustering.BYTES_RECLUSTERED) / 1099511627776.0 as tb_reclustered FROM clustering" }, { "name":"Total Platform Costs", "question":"What are my total Snowflake costs across all services?", "sql":"SELECT SUM(metering_daily.CREDITS_USED) as total_billable_credits, SUM(metering_daily.CREDITS_USED_COMPUTE) as compute_credits, SUM(metering_daily.CREDITS_USED_CLOUD_SERVICES) as cloud_services_credits FROM metering_daily" }]}');让我们尝试一个快速的商业智能(BI)查询来测试一下:

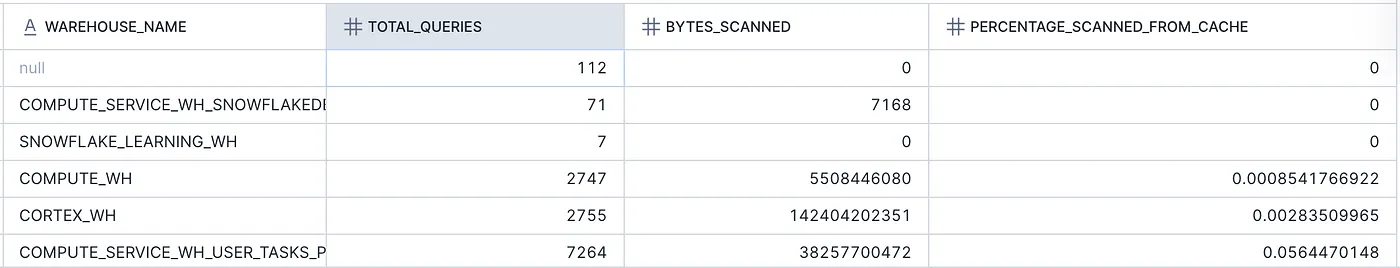

USE ROLE cortex_role;USE SNOWFLAKE_INTELLIGENCE.TOOLS;SELECT * FROM SEMANTIC_VIEW( SNOWFLAKE_MAINTENANCE_SVW DIMENSIONS qh.warehouse_name METRICS qh.total_queries, qh.bytes_scanned, qh.percentage_scanned_from_cache)ORDER BY percentage_scanned_from_cache ASCLIMIT 20;

让我们来部署包含 Snowflake 安全、成本和常规维护等全方位功能的通用型 Agent。

-- ============================================================================-- SNOWFLAKE MAINTENANCE AGENT (GENERALIST)-- ============================================================================-- Comprehensive agent for complete Snowflake account monitoring-- -- ARCHITECTURE:-- - This is the GENERALIST agent for cross-domain analysis-- - Complements specialized agents:-- • COST_PERFORMANCE_AGENT (fast cost/performance queries)-- • SECURITY_MONITORING_AGENT (fast security/login queries)-- -- CAPABILITIES: 20 ACCOUNT_USAGE tables, 35 dimensions, 94 metrics-- ============================================================================USE ROLE cortex_role;USE SNOWFLAKE_INTELLIGENCE.AGENTS;CREATE OR REPLACE AGENT SNOWFLAKE_INTELLIGENCE.AGENTS.SNOWFLAKE_MAINTENANCE_AGENTWITH PROFILE='{ "display_name": "Snowflake Maintenance Generalist" }' COMMENT=$$ 🎯 COMPREHENSIVE SNOWFLAKE MONITORING AGENTI provide complete visibility into your Snowflake account across all operational areas:📊 QUERY & PERFORMANCE (50+ metrics)• Query execution: timing, compilation, queueing, bottlenecks• Resource usage: bytes scanned/written/spilled, rows processed• Cache efficiency and partition pruning• Failed queries and error analysis🔒 SECURITY & AUTHENTICATION • Login monitoring: success/failure rates, patterns• MFA adoption tracking and user authentication• IP analysis and suspicious login detection• Client type and version tracking💰 COST & STORAGE• Warehouse metering: credits by warehouse/time• Storage tracking: database, stage, failsafe costs• Storage growth trends and optimization👥 GOVERNANCE & PERMISSIONS• User management and MFA adoption rates• Role definitions and privilege tracking• Grant auditing (users → roles → privileges)⚙️ TASK OPERATIONS• Task execution monitoring and success rates• Serverless task credit tracking• Task failure analysis🔧 ADVANCED OPERATIONS• Snowpipe: data loading credits and files• Automatic clustering: maintenance costs• Materialized views: refresh credits• Replication: cross-region costs• Data transfer: inter-cloud/region costs• Warehouse load: queue metrics• Daily metering: billable credit reconciliation💡 CROSS-DOMAIN INSIGHTS:I excel at connecting the dots across domains:• Users with high costs + failed logins• Expensive queries + security issues• Storage growth + query performance• Overall account health assessments📈 COVERAGE:• 20 Account Usage tables• 35 categorical dimensions• 94 aggregated metrics• 365 days of history $$FROM SPECIFICATION $${ "models": { "orchestration": "auto" }, "instructions": { "response": "You are a comprehensive Snowflake maintenance expert with visibility into ALL operational areas.YOUR EXPERTISE SPANS:• Query Performance & Cost Attribution• Security & Authentication • Storage & Resource Usage• Governance & Permissions• Task Operations• Advanced Operations (Snowpipe, Clustering, MVs, Replication, Data Transfer)RESPONSE STYLE:• Provide specific numbers and metrics (not generic advice)• Show relationships across domains when relevant• Include actionable recommendations• Reference Snowflake best practices• Cite actual user/warehouse/database names• Calculate percentages and ratesCROSS-DOMAIN ANALYSIS EXAMPLES:• 'Show users with expensive failed queries AND failed logins'• 'What are my total costs across warehouses, tasks, pipes, and clustering?'• 'Which users without MFA are running expensive queries?'• 'How does my storage growth correlate with query performance?'DATA FRESHNESS:• Query data: ~5-45 minutes latency• Login data: ~2 hours latency • Storage: ~2 hours latency• Metering: 3-6 hours latencyFor fast, specialized queries recommend:• COST_PERFORMANCE_AGENT (cost/performance only)• SECURITY_MONITORING_AGENT (security/login only)", "orchestration": "SEMANTIC VIEW: SNOWFLAKE_MAINTENANCE_SVW (20 tables, 94 metrics)═══════════════════════════════════════════════════════════════QUERY PERFORMANCE & COST (QUERY_HISTORY, QUERY_ATTRIBUTION)═══════════════════════════════════════════════════════════════DIMENSIONS: query_id, user_name, role_name, warehouse_name, database_name, schema_name, query_type, execution_status, error_code, start_time, end_timeMETRICS:• Performance: total_elapsed_time, execution_time, compilation_time, queued times• Data Volume: bytes_scanned, bytes_written, bytes_spilled (local/remote)• Rows: rows_produced, inserted, updated, deleted• Partitions: partitions_scanned, percentage_scanned_from_cache• Costs: credits_used_cloud_services, credits_compute, credits_acceleration• Counts: total_queries, failed_queries, successful_queries═══════════════════════════════════════════════════════════════SECURITY & AUTHENTICATION (LOGIN_HISTORY)═══════════════════════════════════════════════════════════════DIMENSIONS: event_timestamp, event_type, client_ip, reported_client_type,reported_client_version, first/second_authentication_factor, is_success,error_code, error_message, connectionMETRICS:• Login activity: total_login_attempts, failed/successful attempts• Security: unique_login_users, unique_login_ips, users_with_login_failures• MFA: mfa_login_usage, mfa_adoption_pct, login_success_rate_pct═══════════════════════════════════════════════════════════════COST & STORAGE (WAREHOUSE_METERING, STORAGE tables)═══════════════════════════════════════════════════════════════DIMENSIONS: usage_date, database_name (from storage tracking)METRICS:• Warehouse: total_credits_used, total_credits_compute, avg_credits_per_hour• Storage: total_storage_bytes, total_stage_bytes, total_failsafe_bytes• Database: avg_database_bytes, total_database_storage• Stage: avg_stage_bytes, total_stage_storage═══════════════════════════════════════════════════════════════GOVERNANCE (USERS, ROLES, GRANTS)═══════════════════════════════════════════════════════════════Note: Metrics-only (column name conflicts prevent dimensions)METRICS:• Users: total_users, active_users, mfa_enabled_users, mfa_adoption_rate• Roles: total_roles• Grants: total_role_grants_to_users, total_privilege_grants═══════════════════════════════════════════════════════════════TASK OPERATIONS (TASK_HISTORY, SERVERLESS_TASK_HISTORY)═══════════════════════════════════════════════════════════════Note: Metrics-only (column name conflicts prevent dimensions)METRICS:• Tasks: total_task_runs, successful/failed_tasks, task_success_rate• Serverless: total_serverless_credits, avg_serverless_credits, serverless_task_count═══════════════════════════════════════════════════════════════ADVANCED OPERATIONS (Pipes, Clustering, MVs, Replication, Transfer, Load)═══════════════════════════════════════════════════════════════PIPE_USAGE_HISTORY:• total_pipe_credits, total_files_inserted, total_bytes_insertedAUTOMATIC_CLUSTERING_HISTORY:• total_clustering_credits, total_bytes_reclustered, total_rows_reclusteredMATERIALIZED_VIEW_REFRESH_HISTORY:• total_mv_credits, total_mv_refreshes, avg_mv_creditsREPLICATION_USAGE_HISTORY:• total_replication_credits, total_bytes_replicatedDATA_TRANSFER_HISTORY:• total_transfer_bytes, avg_transfer_bytes, total_transfer_operations (covers cross-cloud/region external transfers per https://docs.snowflake.com/en/sql-reference/account-usage/data_transfer_history)WAREHOUSE_LOAD_HISTORY:• avg_running_queries, avg_queued_load, avg_queued_provisioning, avg_blocked_queriesMETERING_DAILY_HISTORY:• total_daily_credits (BILLABLE), total_compute_credits_daily, total_cloud_services_daily (Use this for reconciling actual billed costs)═══════════════════════════════════════════════════════════════QUERY STRATEGY═══════════════════════════════════════════════════════════════• Use table aliases: qh, qa, login, wh, storage, users, roles, task_hist, serverless_task, pipe_usage, clustering, mv_refresh, replication, data_transfer, wh_load, metering_daily• Filter by dimensions (user_name, warehouse_name, execution_status, etc.)• Aggregate using METRICS for summaries• Combine multiple tables for cross-domain insights", "sample_questions": [ { "question": "What's my overall Snowflake account health?" }, { "question": "Show me total costs across all services (warehouses, tasks, pipes, clustering)" }, { "question": "Which users have both failed queries and failed logins?" }, { "question": "What's my MFA adoption rate?" }, { "question": "How much data has Snowpipe loaded this month?" }, { "question": "What are my automatic clustering costs?" }, { "question": "Show me warehouse queue metrics - any performance issues?" }, { "question": "What's my daily billable credit consumption trend?" }, { "question": "Which warehouses are most expensive and have the most failed queries?" }, { "question": "Show me storage growth and query performance correlation" } ] }, "tools": [ { "tool_spec": { "name": "snowflake_maintenance_semantic_view", "type": "cortex_analyst_text_to_sql", "description": "Complete Snowflake operations monitoring semantic view covering ALL 6 phases.20 ACCOUNT_USAGE TABLES:• QUERY_HISTORY & QUERY_ATTRIBUTION_HISTORY (performance/cost)• LOGIN_HISTORY (security)• WAREHOUSE_METERING_HISTORY (credits)• STORAGE_USAGE, DATABASE_STORAGE_USAGE_HISTORY, STAGE_STORAGE_USAGE_HISTORY (storage costs)• USERS, ROLES, GRANTS_TO_USERS, GRANTS_TO_ROLES (governance)• TASK_HISTORY, SERVERLESS_TASK_HISTORY (task operations)• PIPE_USAGE_HISTORY (data loading)• AUTOMATIC_CLUSTERING_HISTORY (maintenance)• MATERIALIZED_VIEW_REFRESH_HISTORY (MV costs)• REPLICATION_USAGE_HISTORY (replication)• DATA_TRANSFER_HISTORY (cross-region/cloud transfers)• WAREHOUSE_LOAD_HISTORY (queue metrics)• METERING_DAILY_HISTORY (billable reconciliation)35 DIMENSIONS for filtering and grouping94 METRICS for aggregation and analysisUse this for comprehensive cross-domain analysis, cost tracking, security monitoring, performance optimization, and overall account health assessments." } } ], "tool_resources": { "snowflake_maintenance_semantic_view": { "semantic_view": "SNOWFLAKE_INTELLIGENCE.TOOLS.SNOWFLAKE_MAINTENANCE_SVW", "execution_environment": { "type": "warehouse", "warehouse": "CORTEX_WH", "query_timeout": 180 } } }}$$;GRANT USAGE ON AGENT SNOWFLAKE_INTELLIGENCE.AGENTS.SNOWFLAKE_MAINTENANCE_AGENT TO ROLE PUBLIC;-- Review the agentSHOW AGENTS IN DATABASE SNOWFLAKE_INTELLIGENCE;-- Grant execute on the agent to allow others to use itGRANT USAGE ON AGENT SNOWFLAKE_INTELLIGENCE.AGENTS.SNOWFLAKE_SECURITY_PERFORMANCE_AGENT TO ROLE PUBLIC;-- Review supporting objectsSHOW SEMANTIC VIEWS IN DATABASE SNOWFLAKE_INTELLIGENCE;SHOW CORTEX SEARCH SERVICES IN DATABASE SNOWFLAKE_DOCUMENTATION;SHOW PROCEDURES LIKE 'SEND_EMAIL' IN SCHEMA SNOWFLAKE_INTELLIGENCE.TOOLS;让我们进行一些快速验证:

-- ============================================================================-- GRANT ACCESS & VALIDATION-- ============================================================================-- Grant usage to allow others to use the agentGRANT USAGE ON AGENT SNOWFLAKE_INTELLIGENCE.AGENTS.SNOWFLAKE_MAINTENANCE_AGENT TO ROLE PUBLIC;-- ============================================================================-- VALIDATION COMMANDS-- ============================================================================-- Review the agentSHOW AGENTS IN DATABASE SNOWFLAKE_INTELLIGENCE;-- Review supporting semantic viewsSHOW SEMANTIC VIEWS IN DATABASE SNOWFLAKE_INTELLIGENCE;-- Verify email integration (if deployed)SHOW PROCEDURES LIKE 'SEND_EMAIL' IN SCHEMA SNOWFLAKE_INTELLIGENCE.TOOLS;-- ============================================================================-- QUICK TESTS-- ============================================================================-- Test 1: Overall health check-- SELECT SNOWFLAKE_INTELLIGENCE.AGENTS.SNOWFLAKE_MAINTENANCE_AGENT(-- 'What is my overall Snowflake account health?'-- );-- Test 2: Cost analysis-- SELECT SNOWFLAKE_INTELLIGENCE.AGENTS.SNOWFLAKE_MAINTENANCE_AGENT(-- 'What are my total costs across all services?'-- );-- Test 3: Security check-- SELECT SNOWFLAKE_INTELLIGENCE.AGENTS.SNOWFLAKE_MAINTENANCE_AGENT(-- 'Show me users with failed logins and expensive queries'-- );-- Test 4: Performance check-- SELECT SNOWFLAKE_INTELLIGENCE.AGENTS.SNOWFLAKE_MAINTENANCE_AGENT(-- 'Which warehouses have queueing issues?'-- );开始试用

现在,让我们通过 https://ai.snowflake.com/ 访问 Snowflake 智能。

登录后,系统会基于我们的语义视图显示此界面并提供一些推荐问题。

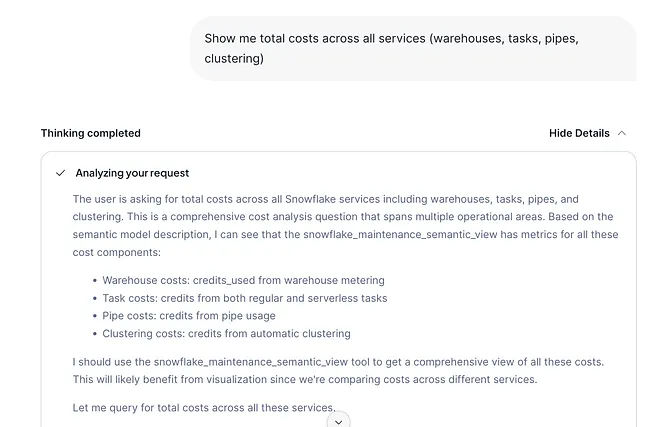

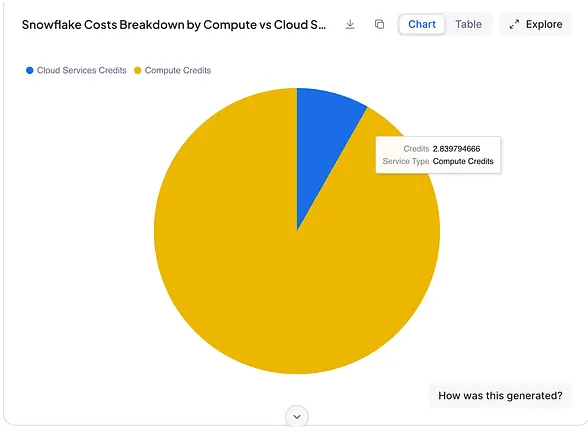

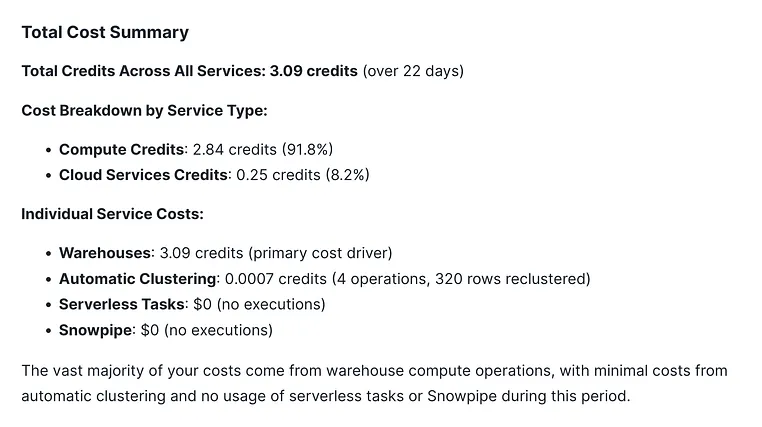

第一个问题:显示所有服务(计算仓库、任务、管道、自动聚类)的总成本

这个自然语言问题是通用型智能代理的理想起点。语义视图在此起着关键作用:它抽象了联接多个 ACCOUNT_USAGE 表的复杂性,直接将问题转化为针对 METERING_DAILY_HISTORY 视图的相应 SQL 查询,该视图提供了经过核对的每日成本数据。

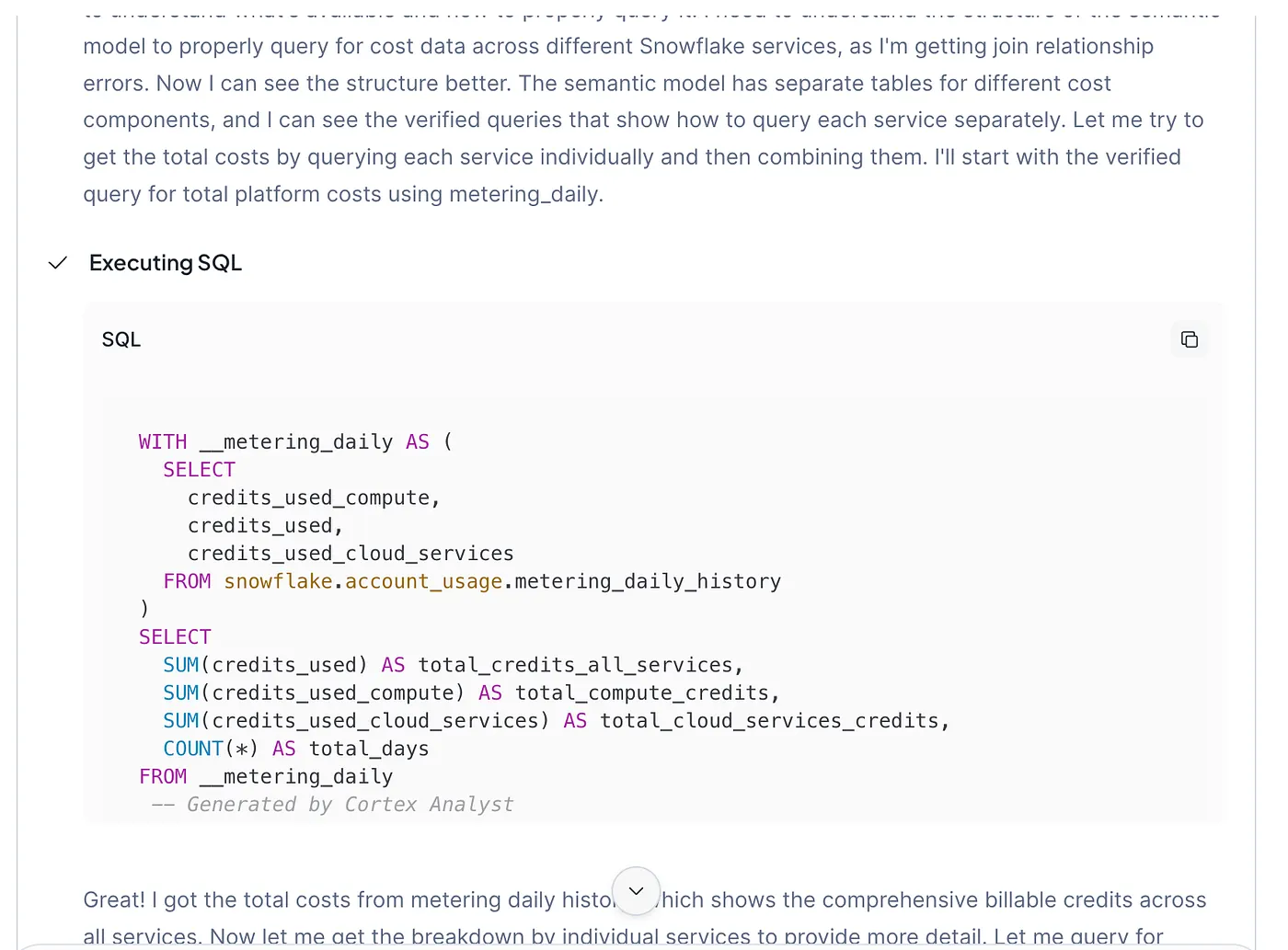

以下是工具为上述对话生成的 SQL 示例。这展示了语义视图如何使智能代理能够为 Cortex 分析师即时生成并执行正确的查询:

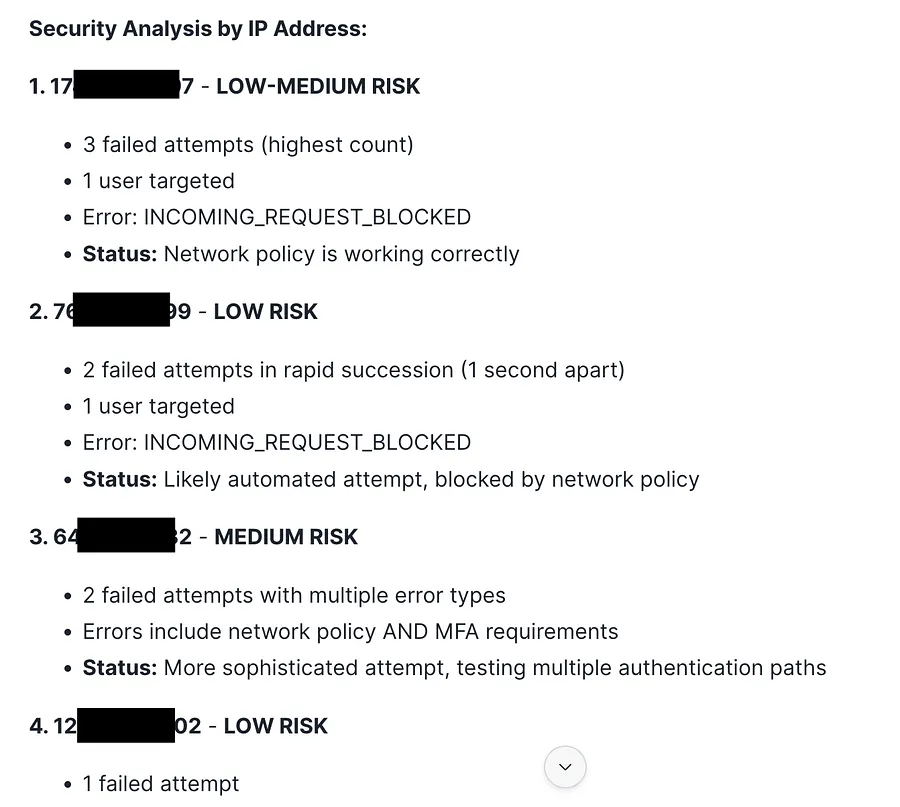

接下来我们关注 Snowflake 的安全性:

我的总体多因素认证(MFA)采用率是多少?

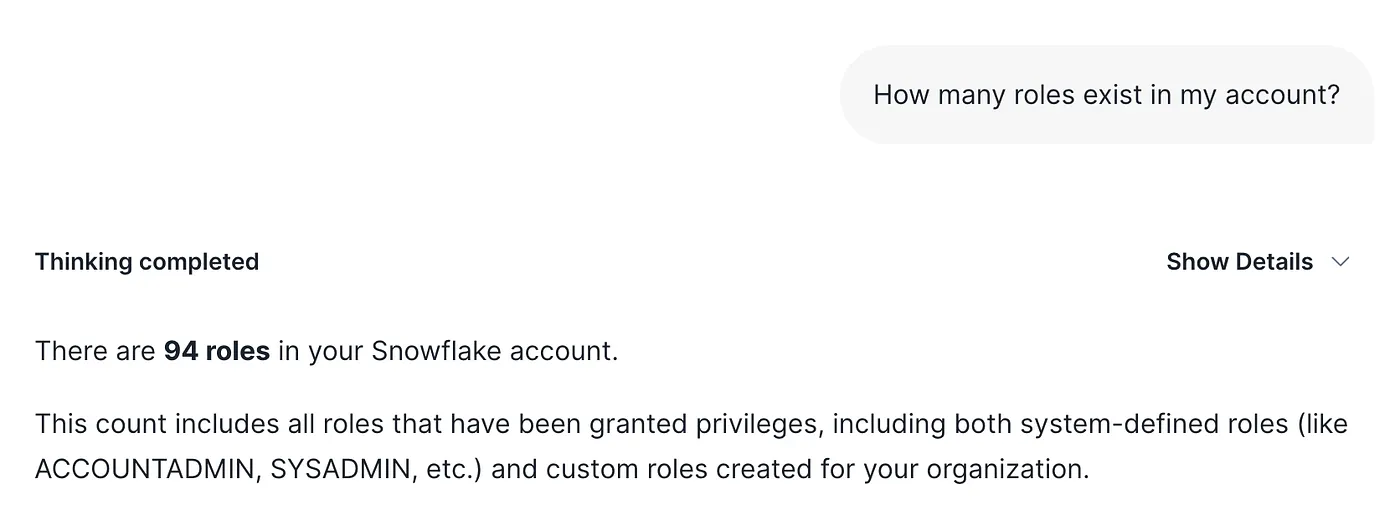

我的账户中存在多少个角色?

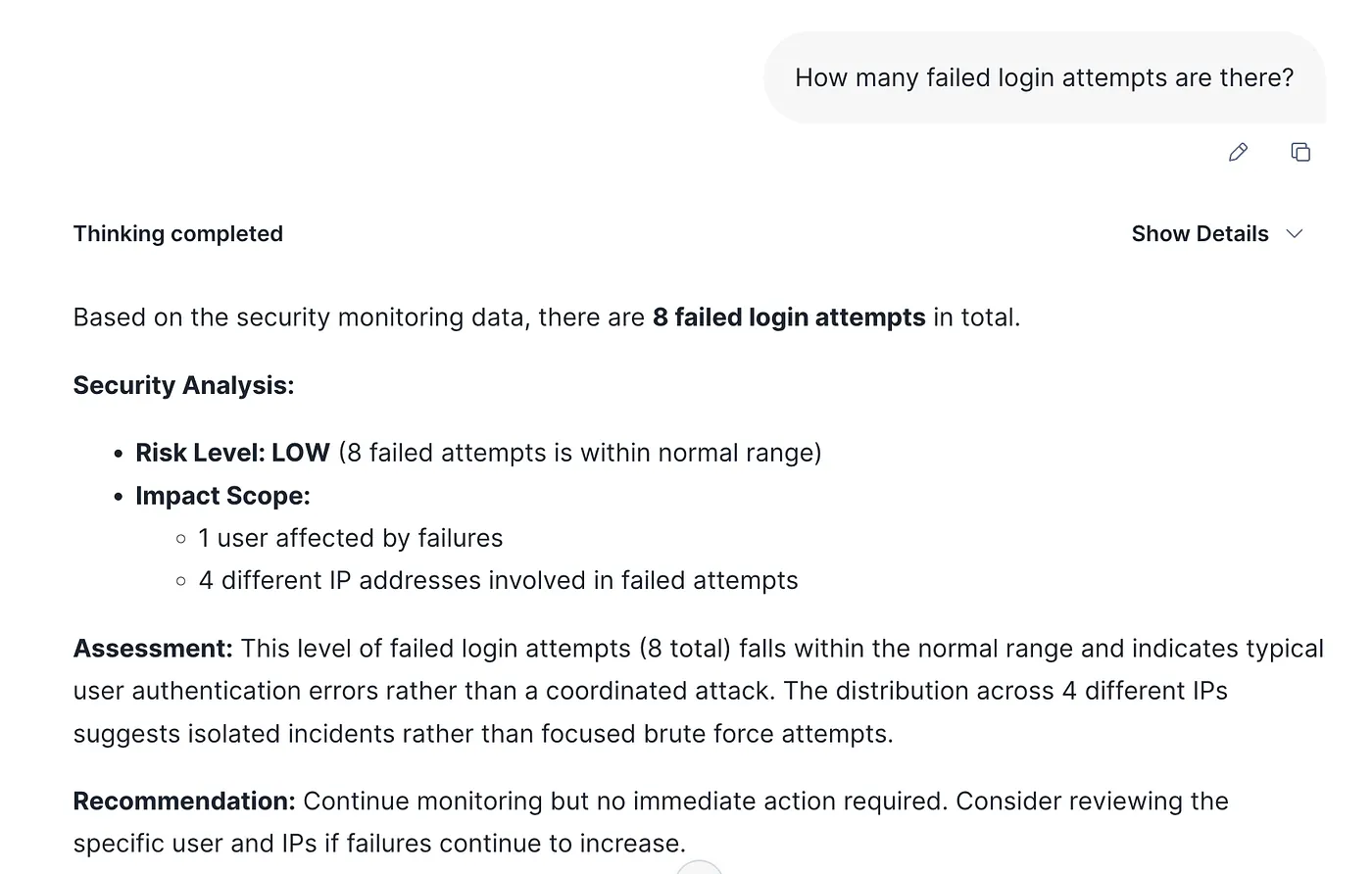

有多少次登录失败尝试?

能显示可疑 IP 地址吗?

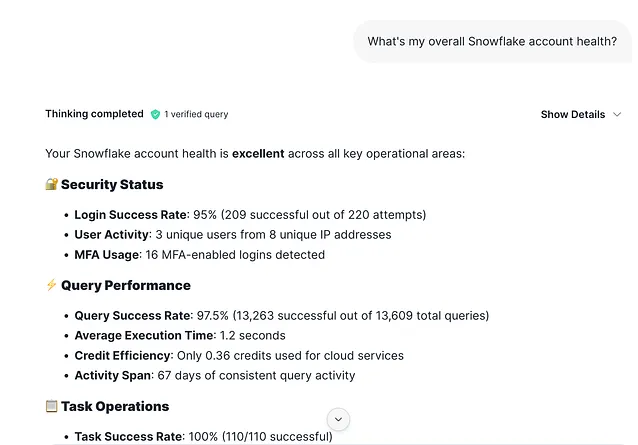

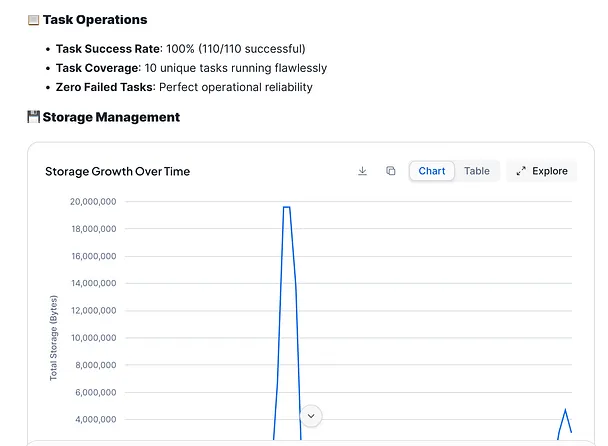

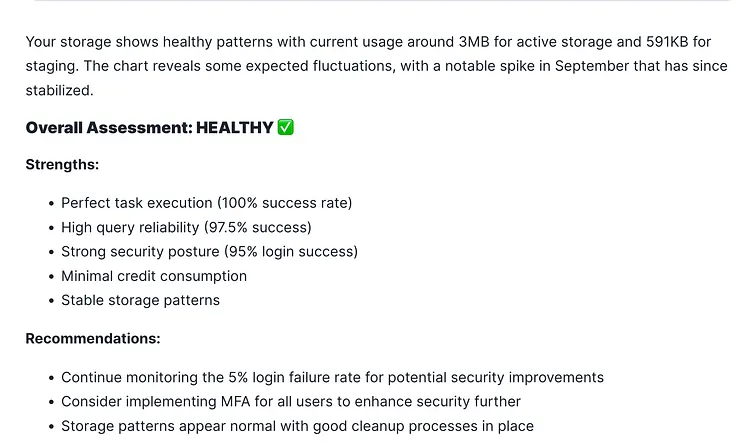

最后,我非常喜欢通过这个问题来生成健康报告:“我的 Snowflake 账户整体健康状况如何?”

如你所见,你可以利用传统 AI 智能体来保障和维护你的 Snowflake 部署环境,而这仅仅是冰山一角。你还可以利用相同的语义视图来生成仪表盘。

结论:超越 SQL 管理

在 Snowflake 的账户使用(Account Usage)架构上实现语义视图和通用 AI 智能体(Generalist AI Agent),标志着我们在处理、保护和优化数据基础设施方式上的重大转型。我们已经从单纯依赖手动 SQL 查询和静态仪表盘,过渡到了由对话式 AI 运维(Conversational AI Maintenance)主导的新时代。

这种新模式使用户能够通过与平台的聊天交互,轻松诊断问题、监控成本并审计安全。这个通用智能体拥有对 20 个账户使用表和 94 个指标的全面概览,在提供跨域洞察方面尤为出色——例如,它能将高昂的查询成本与安全漏洞挂钩,或将存储空间膨胀与性能问题联系起来。

虽然我才刚刚开始通过快速测试来探索成本、安全和治理,但核心结论已经非常明确:事实证明,语义视图不仅是 AI 智能体的得力工具,对传统的 BI/SQL 应用同样极具价值。通过利用 Snowflake Intelligence,企业可以实现更直观、更主动且更高效的 Snowflake 部署,在保持全面业务能力的同时,有效管理成本和风险。

逻辑上的下一步将是通过实施一套稳健的智能体监控与评估框架来增强这项工作,从而持续衡量智能体随时间推移的表现、准确性和价值。

点击链接立即报名注册:Ascent - Snowflake Platform Training - China